TOC

Generating TOC...

3.1. The Need for AI-Based API Test Automation and TestLAB AI

Modern development organizations are increasingly required to deliver shorter release cycles and higher quality levels simultaneously. With the spread of microservice architecture and cloud-native environments, APIs have become central to communication between services. As a result, API testing is no longer considered an optional activity for QA teams, but rather an automated verification procedure that must be integrated into CI/CD pipelines.

However, traditional script-based test automation has the following limitations:

- High maintenance costs: When API specifications change, test code may need to be modified repeatedly.

- Limitations of coverage: Tests designed by humans cannot sufficiently cover edge cases or exceptional inputs.

- Decreased productivity: The more time spent on test creation and maintenance, the more negative impact it has on both release speed and quality.

In this situation, test automation utilizing AI, especially LLM, presents new possibilities. This is because it automates repetitive and structured tasks while flexibly reflecting user intent. When applying AI technologies, including LLM, to API test automation, we can expect the following key benefits.

- Reduced maintenance burden

- In an API environment that undergoes frequent changes, automatic generation and patching features reduce repetitive code modification tasks. This helps eliminate testing bottlenecks and reduce release delay risks.

- Example: If the API response that was previously “email” has been changed to “userEmail,” the automatic patch function will automatically modify the relevant verification code.

- Strengthening verification coverage

- LLMs automatically generate a variety of test cases, including boundary values, exception paths, and atypical inputs. This helps improve service stability by covering even cases that are easy for people to overlook.

- Example: Automatically detect when the ‘userId’ field accepts values other than integers, and suggest test cases for that condition.

- Implement agile feedback loops

- Automated tests are quickly executed within the CI/CD pipeline, enabling early detection of potential defects prior to deployment. This reduces urgent revision costs and increases release speed.

- Example: Early detection of missing fields in the new API response format as a test failure just before release.

- Flexibility in reflecting user intent

- Verification requirements can be expressed through natural language prompts. This allows us to flexibly reflect testing objectives without modifying the code.

- Example: Based on the sentence “The createdAt field in the response must be in ISO 8601 format,” the LLM automatically generates the corresponding format validation logic.

- Data accumulation and continuous improvement

- As test execution data, response examples, prompt-code mappings, and other records accumulate, they can be used to optimize prompt templates and automate domain-specific verification. In the long term, it lays the foundation for building a sophisticated test automation environment within the organization.

- Example: By learning combinations of frequently appearing prompts and verification logic, the system can automatically suggest appropriate verification code without explicit prompts in the future.

In the future, AI-based test automation will go beyond simply assisting in test creation and expand to encompass the entire development lifecycle, including requirements analysis, test case inference, and quality risk prediction.

TestLAB AI case study

TestLAB AI is an API testing automation tool that utilizes LLM to automatically generate and maintain verification code based on API request definitions and actual response data.

Key Features of TestLAB

The four core features of TestLAB are summarized below:

Automatic generation of verification code based on response analysis

The response messages collected by actually calling the API request information (endpoint, HTTP method, request header/body examples, etc.) defined in advance are delivered to the LLM. LLM understands the structure and meaning of responses and automatically generates assertion code for the corresponding API call. For example, it can generate test code snippets that include JSON/XML structure analysis, verification of major field types, verification of the existence of required fields, verification of nested structures, and verification of error response formats. This saves QA engineers time spent writing repetitive standard verification logic (type checks, required fields, range checks, etc.).

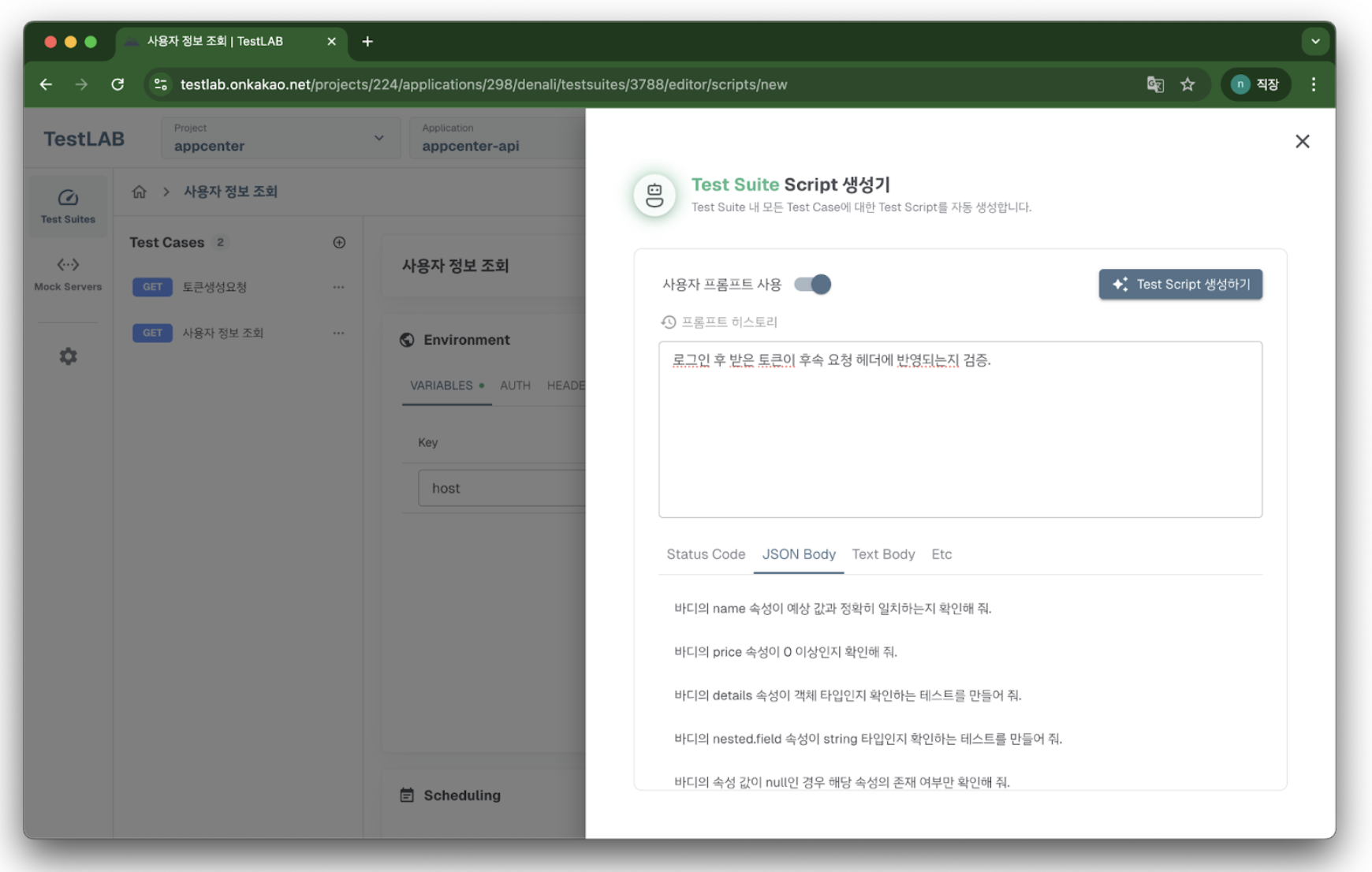

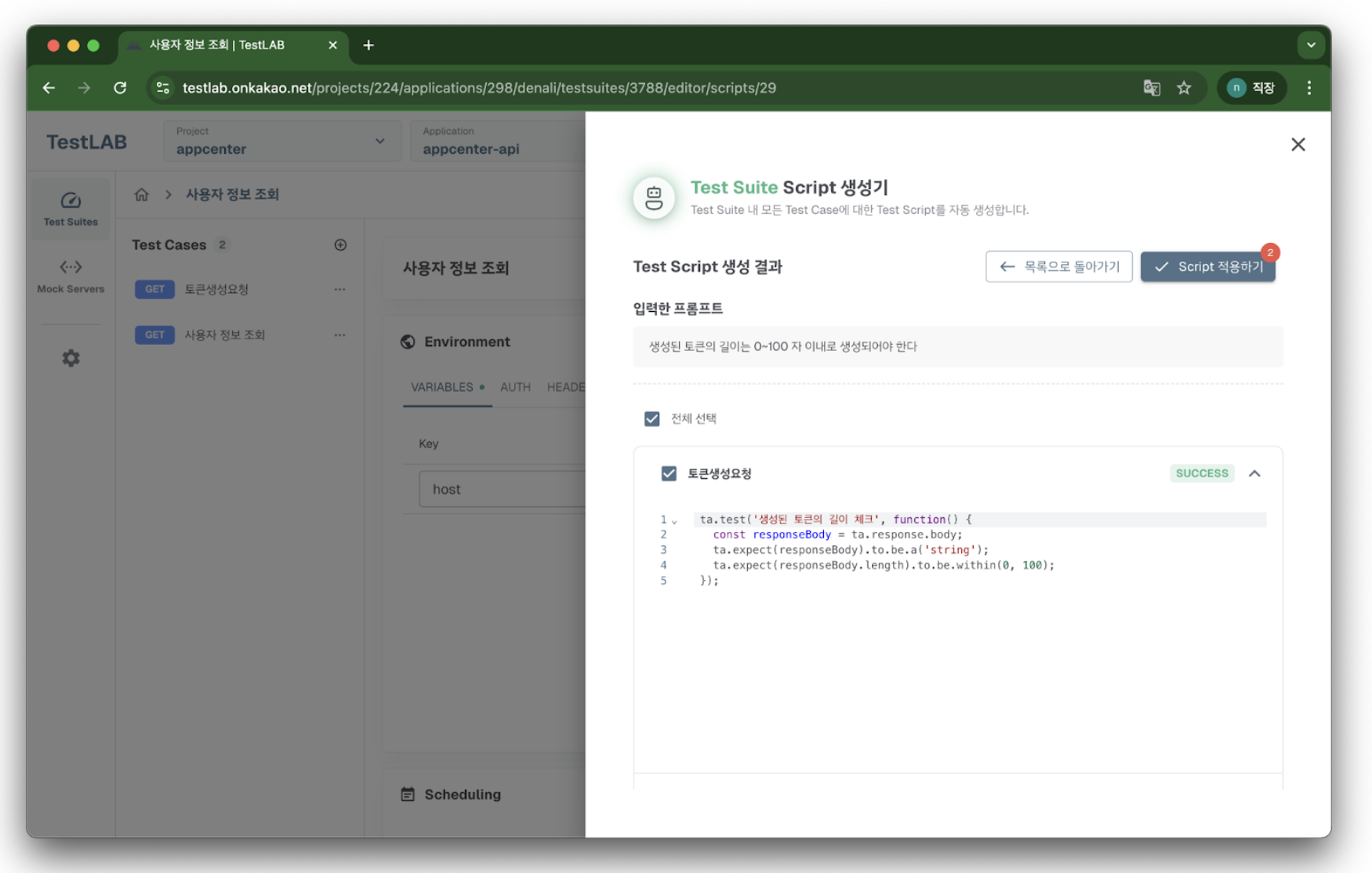

Reflecting user intent based on user prompts

When QA engineers or developers enter specific verification intentions in natural language, such as “This field must be in the range of 0 to 100” or “The createdAt field of the response must follow the ISO 8601 UTC format,” TestLAB’s internal prompt engine transmits this to the LLM to generate customized verification code.

Establishing standard prompt templates will increase consistency and accuracy. For example, by defining templates for each verification scenario, such as “minimum/maximum check for number fields” and “check for the existence of specific keys in response objects,” we can quickly create prompts for each API according to the corresponding structure.

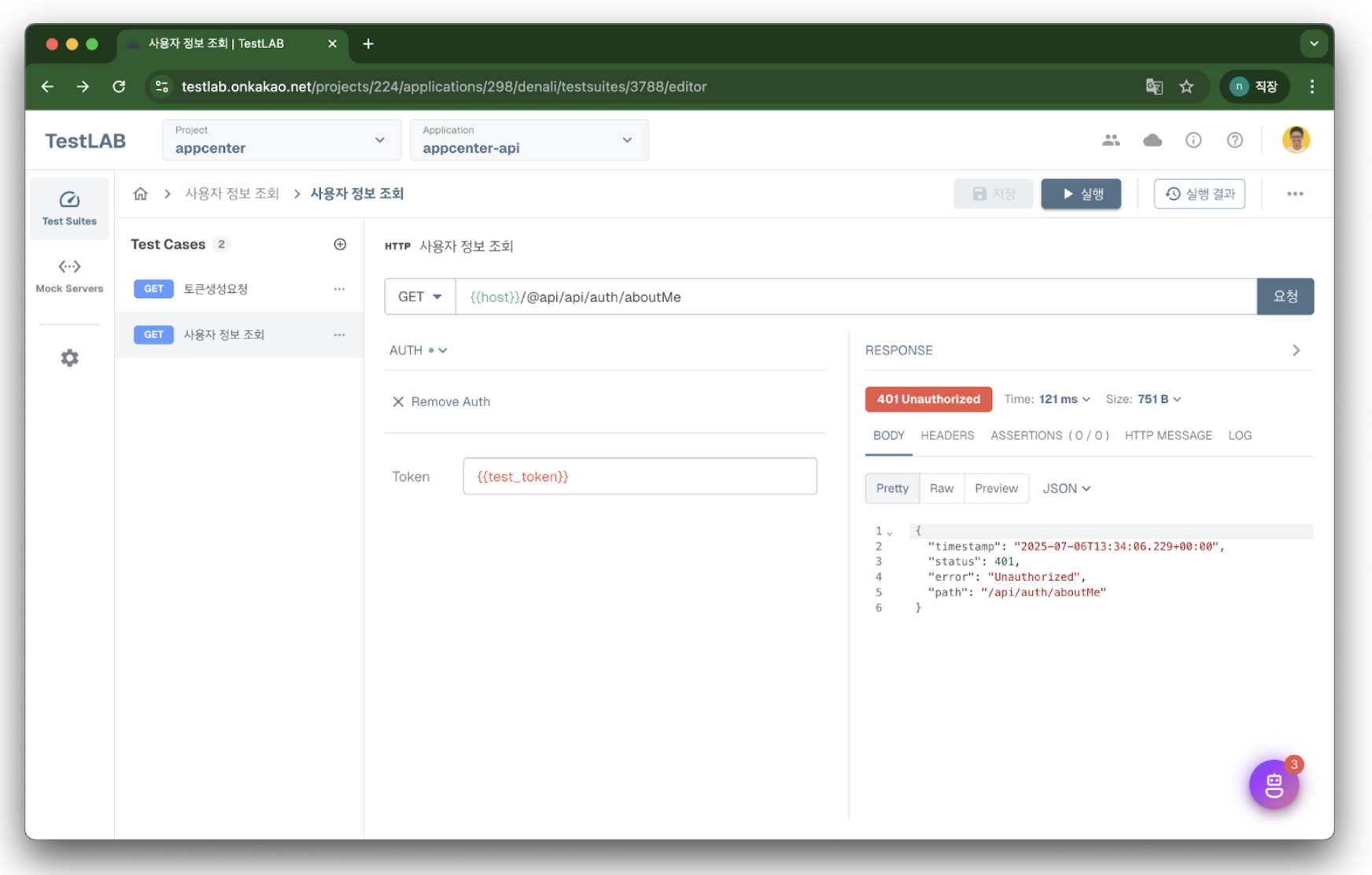

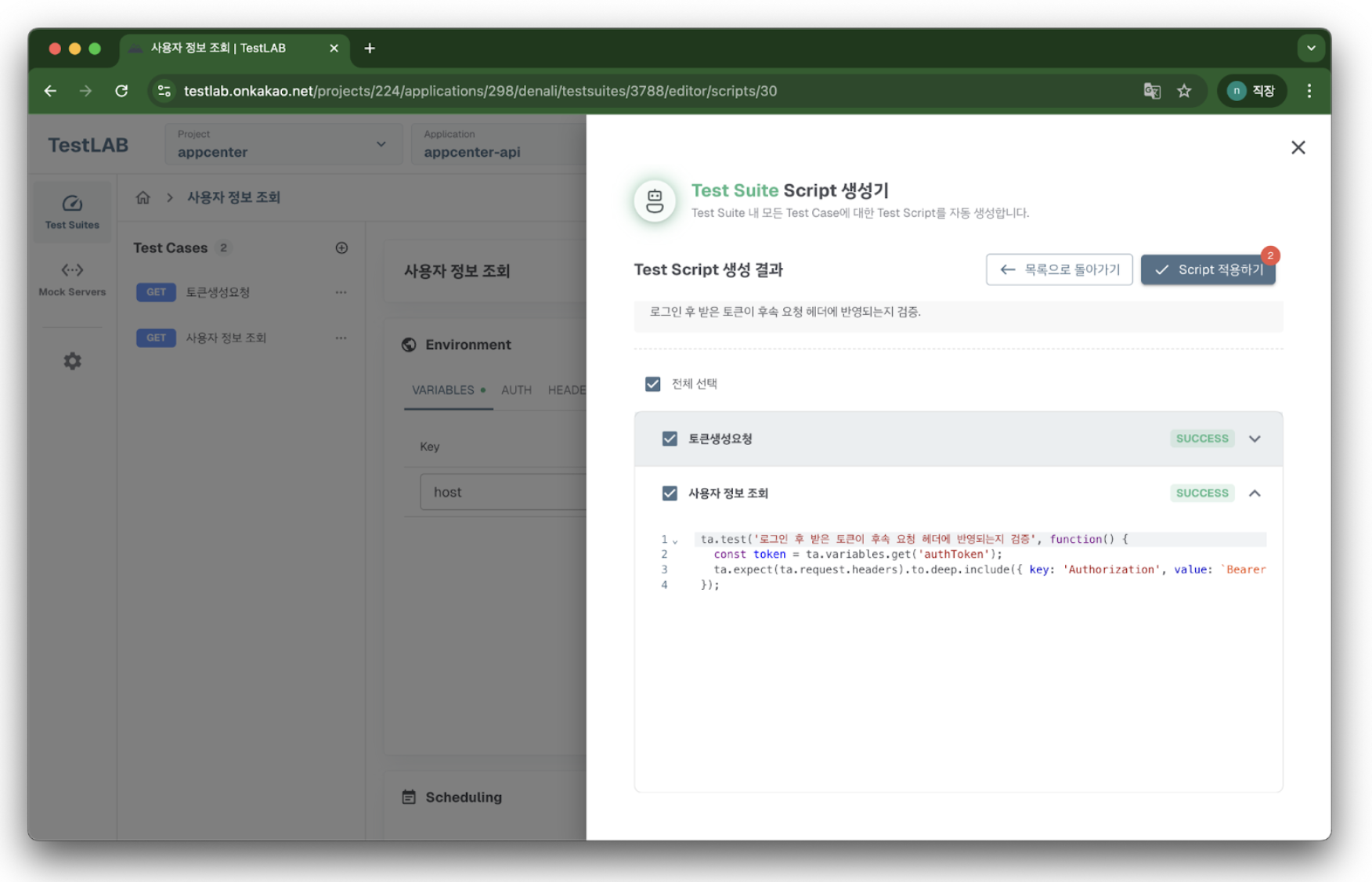

Support for individual requests and scenario-based creation

TestLAB provides not only verification code for single API calls, but also workflows that combine multiple API calls (e.g., Login → Create Resource → View → Delete). At this point, it automatically generates logic that maps the data obtained from the response of the previous call to variables for use in the next request, while also verifying the success/failure conditions for the entire scenario. For example, code is automatically generated to reflect the token received by the login API in the header to verify subsequent calls, or to use the generated resource ID to configure the query and delete API verification flow.

Verification code maintenance support

When API specifications change, re-enter the changed request definitions and new response examples into TestLAB, and QA engineers will provide prompts such as “Field name has changed, please update verification logic” so that LLM can automatically patch existing test code or suggest new test code. This process includes mapping changed field names, modifying nested field access methods due to structural changes, and creating validation logic for newly added response fields. Once QA has completed final review and reflected the changes in TestLAB, the test pipeline will remain up to date without interruption.

TestLAB AI Flow

The following is a typical workflow for operating TestLAB AI:

Collect and prepare API request definitions

- Upload REST/OpenAPI specifications, Postman collections, or manually defined request templates to TestLAB.

- Set header and body examples, authentication methods, and environment variables (staging and development environment endpoints, etc.) for each request.

- At this stage, we should prepare request examples that are as accurate and realistic as possible to improve the quality of response example collection and verification code generation.

Response execution and examples

- TestLAB calls the uploaded request definitions and collects successful and error case response messages.

- We use staging environments and virtual (mock) servers to ensure responses to various error scenarios, such as authentication failures and parameter omissions.

- When sufficient normal/error/edge case responses are secured, LLM can accurately understand the response structure and generate comprehensive verification code.

Writing user prompts

- QA engineers or developers enter verification intentions in natural language. Example:

- “The status code must be 200 or 404.”

- “Verify that the createdAt field is in ISO 8601 UTC format.”

- Verify that the token received after logging in is reflected in the subsequent request header.

- By establishing standard prompt templates, we can improve accuracy and ensure consistency.

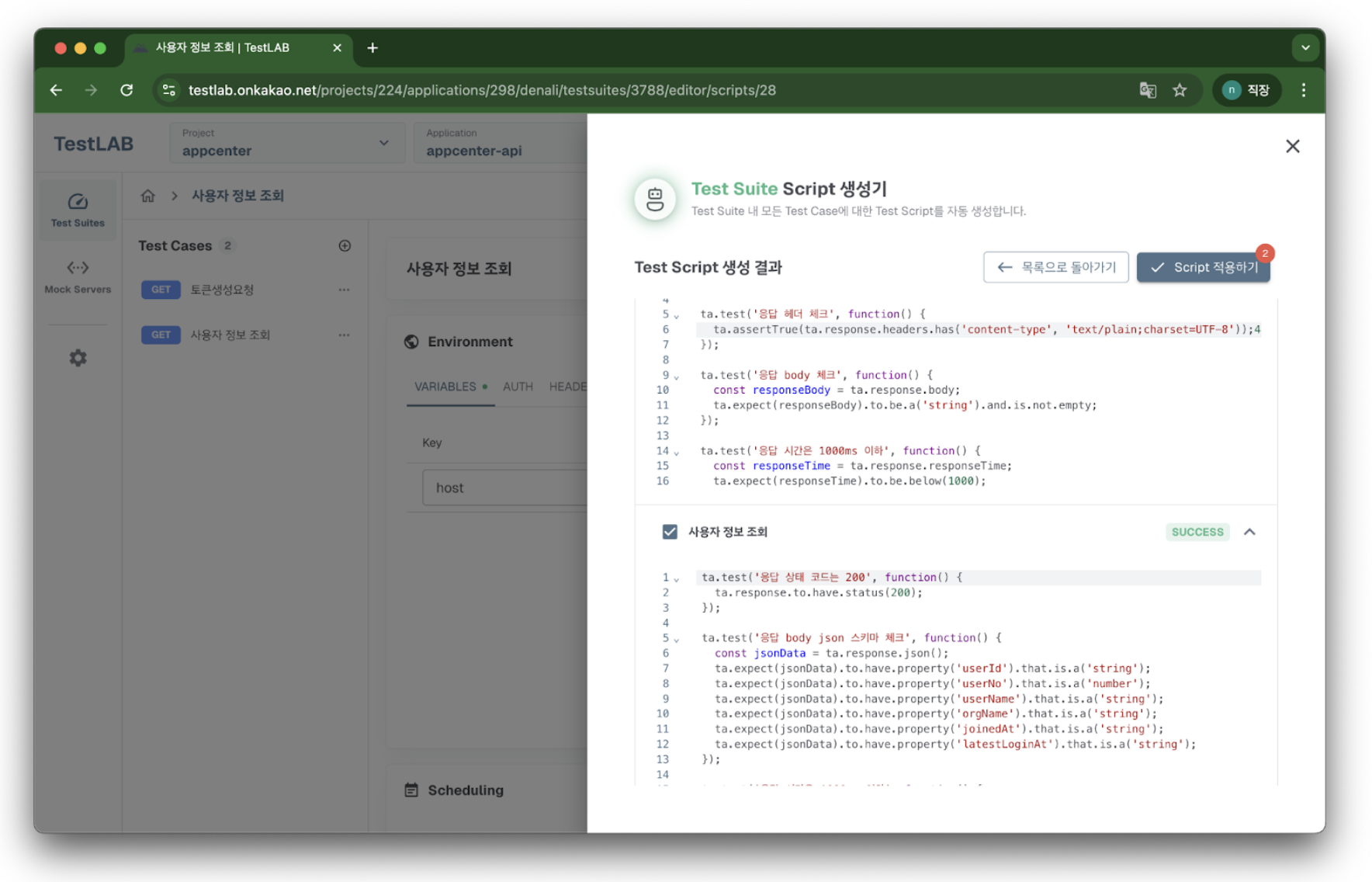

LLM-based verification code generation

- TestLAB combines the collected response examples and prompts and sends them to the LLM.

- LLM responds with test code snippets that reflect JSON/XML structure analysis, type verification, field existence, range checks, exception path verification, and more.

- The generated code can be previewed in the TestLAB UI, and if the results differ from our intentions, we can enter additional prompts to regenerate it.

Verification code review and integration

- QA engineers review automatically generated code to ensure that there are no discrepancies with the intended purpose.

- Reflect the reviewed code in TestLAB, integrate it into the CI/CD pipeline, and set it to run periodically.

- After that, we monitor the test results (success/failure, response time, error messages, etc.), analyze failure patterns repeatedly, and make improvements.

Responding to specification changes and maintaining continuity

- When the API spec changes, reflect the changed request definitions and new response examples in TestLAB.

- When a QA engineer provides a prompt such as “The field name has been changed, please update the verification logic,” TestLAB uses LLM to automatically patch existing code or propose new code.

- Once QA has completed final review and reflected the results in TestLAB, the test pipeline continues without interruption, maintaining the latest verification logic.

Benefits gained from building and utilizing TestLAB AI

Here are the key benefits gained through the establishment and utilization of TestLAB AI:

- Improve productivity in writing verification code

- Initial test code writing time has been reduced by automatically generating standard verification logic such as repetitive type verification, required field checks, and range checks. QA engineers can now focus on more high-level checks and analysis, such as complex scenario design and non-functional test reviews, as the burden of writing basic assertions¹ has been reduced. For example, when launching a new API, TestLAB automatically generates basic verification code, which significantly reduces the time required compared to traditional manual work.

- Ensuring comprehensive coverage

- By generating verification code based on error and edge case responses as well as normal cases, boundary value and exception path verification, which are easy for humans to overlook, are naturally included. This has enabled us to detect potential errors that may occur in the production environment in advance, thereby improving service stability.

- Prompt-based flexibility

- Natural language prompts enable immediate reflection of detailed verification requirements, allowing us to quickly update test verification code when API specifications change or business logic changes. Even in situations that require urgent fixes, we can prepare verification logic within a few hours by simply adjusting the prompt and reflect it in the CI/CD pipeline.

- Support for scenario-based complex verification

- The feature that automatically configures workflow tests combining multiple API calls, such as the flow of login→resource creation→view→delete, can significantly reduce the time required to write E2E scenario tests. This enables us to run comprehensive workflow verification more frequently, detect potential defects early, and contribute to release stability.

- Reduced maintenance burden

- In environments where API specifications change frequently, TestLAB’s automatic patching and new code suggestion features can reduce the time spent modifying test code. Especially in systems that undergo frequent changes, such as microservice environments, these features help prevent release delays and maintain consistency in the test pipeline.

- Expected improvements in test stability and release speed

- By integrating the verification code generated by TestLAB into the CI/CD pipeline for automatic execution, comprehensive verification can be performed more quickly, which is expected to increase the rate of early detection of potential defects. As a result, we expect to see an increase in the discovery of major defects prior to deployment, a decrease in the frequency of production failures, and a shortening of the regression test cycle, leading to improved release speed.

- Expectations for QA inspection workflow enhancement

- By designing a workflow that allows for quick feedback on verification and improvements through a QA review stage, even for automatically generated code, we expect that the accuracy of prompt templates and LLM integration will improve over time. Although initial inspections may take some time, we expect a virtuous cycle in which the accuracy of results improves through repeated feedback and inspection overhead gradually decreases.

- Expectations for accumulation of learning data and establishment of internal optimization

- By systematically storing response examples uploaded to TestLAB and their corresponding prompt-verification code mapping history, we can establish a data-driven foundation that can be leveraged for future organizational initiatives such as prompt template refinement or fine-tuning of internal LLMs. This makes it possible to reduce dependence on external models and evolve to a customized test automation environment that reflects domain-specific response patterns and security and compliance rules.

AI-based API test automation goes beyond a simple test authoring tool to become a turning point that organically connects each stage of the test lifecycle. TestLAB AI plans to explore the following directions for future evolution:

- Operating our own in-house model: In-house LLM-based operations instead of external APIs for sensitive data protection and improved response speed

- Test Oracle Automation: Implementation of anomaly detection verification based on past response patterns without explicit correct answers

- Collaboration-centric test design: Establish a collaborative testing environment where QA, developers, and planners participate in prompt creation and review.

- Support for various quality attributes: Extend AI-based automation beyond functional verification to performance, security, and contract-based testing.

Footnotes

1) An assertion located at a specific point in a program is a logical expression that represents a condition the developer expects to always be true at that point. If the assertion is violated (i.e., the expression evaluates to false), it implies that there is a bug or some other issue in the program.