2.4. AI ethical principles and AI risk management framework

As AI technology becomes increasingly embedded in our lives and work, public expectations and corporate responsibility for ensuring that the results produced by AI are ethical, fair, and reliable are greater than ever. For example, AI models can learn or amplify biases inherent in their training data and produce discriminatory results, a concern that must be addressed.

Kakao has created a framework so that everyone can enjoy the benefits of AI technology fairly, established AI ethical principles so that AI can have a positive impact on human society, and manages the risks that may occur during AI development and use.

Establishing and spreading AI ethical principles

To foster a culture of responsible AI development and use, Kakao has distributed the “Kakao Group Guidelines for Responsible AI” and is building and operating an internal implementation system based on them.

Kakao Group’s guidelines for responsible AI

Kakao presents the following 10 AI ethical principles as a promise to create a better world together with society.

- Social ethics: We carry out all AI-related efforts within the framework of social ethics and, through them, pursue the benefit and happiness of humanity.

- Inclusiveness: We recognize that providing AI technology that leaves no one behind is an important responsibility of a digital company, and we pursue an environment in which all users can use the community's AI technology equally, regardless of age or disability. The community strives to make AI technology and services inclusive of society as a whole.

- Human rights: We aim to fulfill our responsibility to respect human rights throughout the development and use of AI technology. We strive to prepare preventive and responsive measures for situations in which stakeholders’ rights are not guaranteed because of AI technology.

- Non-discrimination and non-bias: We guard against AI causing discrimination or making biased decisions based on gender, race, origin, religion, and the like.

- Transparency: We continuously inform users of the purpose of use, usage details, and risk factors of AI technology within our services. To this end, we explain the relevant content in good faith, to the extent that doing so does not impair corporate competitiveness.

- Security, safety, and independence: We establish a security system that complies with legal requirements so that AI systems and data can be defended from risks such as external attacks and malicious use. We strive to provide stable services by establishing safety measures and response systems for abnormal operation of AI systems and unexpected situations. We strictly manage AI so that it is not damaged or affected by external or internal factors.

- Privacy: We protect user privacy throughout the development and use of AI technology. To minimize the misuse of personal information, we establish personal information protection procedures and take preventive measures and countermeasures in accordance with relevant laws and regulations.

- User protection: Our AI technology effectively reflects the user protection policies required by applicable laws and regulations. We strive to establish preventive procedures against infringement of users’ rights and interests, and we continue to check afterward that these procedures work properly.

- Vigilance against adverse effects: We acknowledge the imperfections of AI technology and prepare for possible social consequences that differ from design intentions. If necessary, we work with relevant experts to find solutions.

- User agency: In interactions between humans and AI, we strive to prevent excessive human dependence on AI, as well as uses that weaken a person’s autonomy or threaten physical, mental, or social safety.

Operation of the Group Technical Ethics Subcommittee

We operate a control tower mechanism that proactively checks, responds to, and manages potential risks that may occur during the Kakao Group’s AI development and use.

Compiling a checklist of key items for secure AI

AI technology and service development is divided into four stages: (1) planning and design, (2) data collection and processing, (3) AI model development/planning and implementation, and (4) operation and monitoring. We then check whether all 7 items covered by the AI ethical principles, including bias and transparency, were fully considered during the development process.
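As a rough illustration only, the stage-by-item check described above could be represented as a small matrix. The sketch below is not Kakao's actual tooling. It assumes that each item is reviewed once per stage, which the text does not state, and it includes only the two items the text names (bias and transparency), since the other five are not listed here. The names `STAGES`, `KNOWN_ITEMS`, and `missing_checks` are hypothetical.

```python
from itertools import product

# The four development stages named in the text.
STAGES = (
    "planning and design",
    "data collection and processing",
    "AI model development/planning and implementation",
    "operation and monitoring",
)

# Only two of the seven checklist items are named in the text; the other five
# are not listed there, so they are deliberately left out of this sketch.
KNOWN_ITEMS = ("bias", "transparency")


def missing_checks(considered, stages=STAGES, items=KNOWN_ITEMS):
    """Return every (stage, item) pair that has not been marked as considered."""
    return [pair for pair in product(stages, items) if pair not in considered]


# Hypothetical review state: bias was reviewed at every stage,
# transparency only during planning and design.
considered = {(stage, "bias") for stage in STAGES}
considered.add(("planning and design", "transparency"))

gaps = missing_checks(considered)
for stage, item in gaps:
    print(f"not yet considered: {item} during {stage}")
```

Keeping the review state as a set of (stage, item) pairs makes it easy to see which combinations are still open at any point in development.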

Providing guidance on the in-house development and use of generative AI

This is a minimal set of guidelines to reduce uncertainty for members who use generative AI within the company. It explains the risks associated with generative AI services and the points to keep in mind when using them.

Strengthening AI ethics education for all employees

We conduct regular training and workshops so that all members can correctly understand the social and institutional impact of AI technology and make sound decisions when they face ethical issues in their actual work.

Real-world case-based learning and improvement

Kakao regards ethical issues that have arisen during actual service development and operation as important learning assets.

Kakao AI Safety Initiative

The Kakao AI Safety Initiative (hereafter Kakao ASI) is a comprehensive guideline to minimize risks that may occur during the development and deployment of AI technology and to establish an ethical and safe AI system. It is applied throughout the entire life cycle of an AI system, from design to development, testing, deployment, monitoring, and updating.

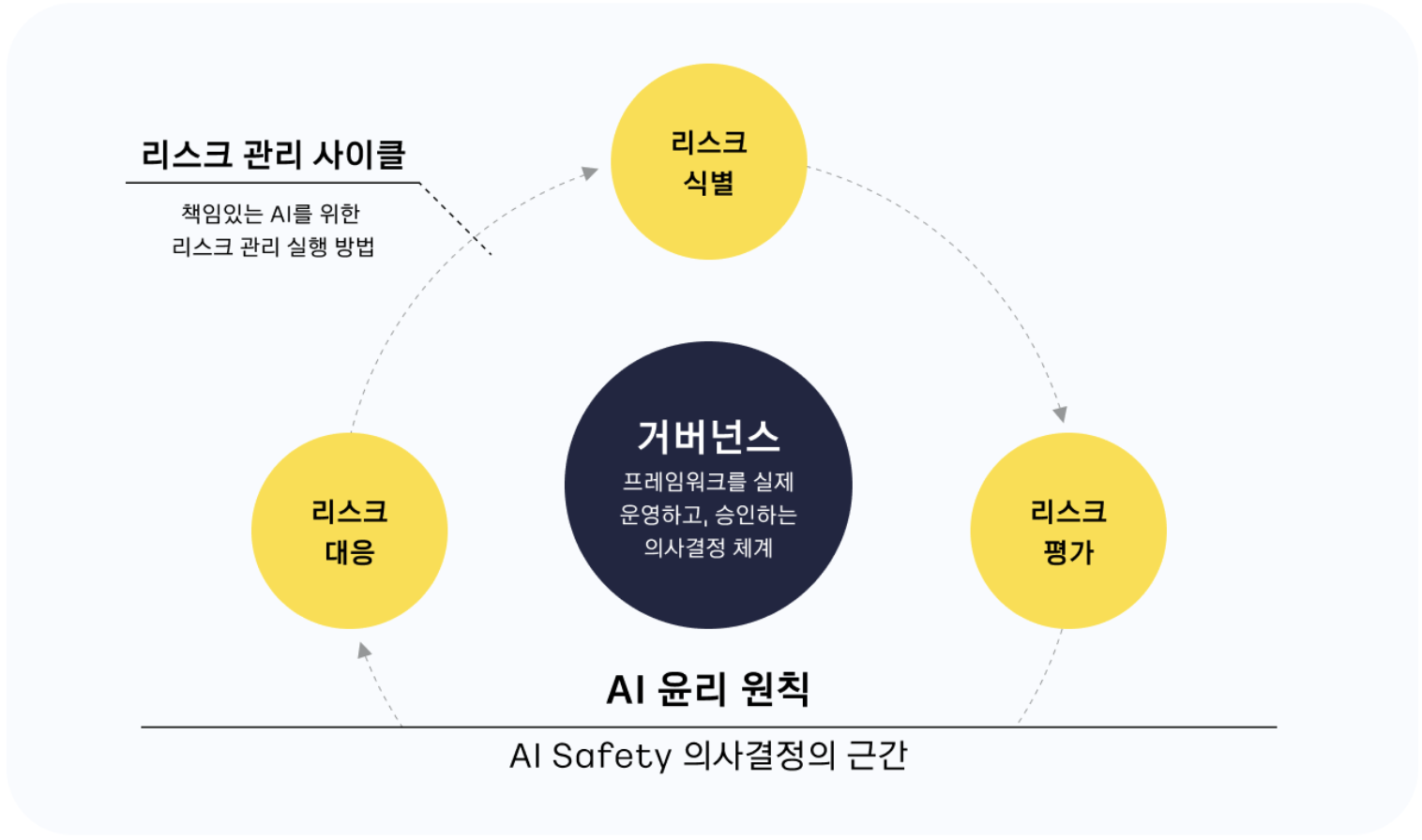

Kakao ASI consists of three key elements: AI ethics principles, a risk management cycle, and AI risk governance. The two elements other than the AI ethics principles described above are as follows:

Risk management cycle

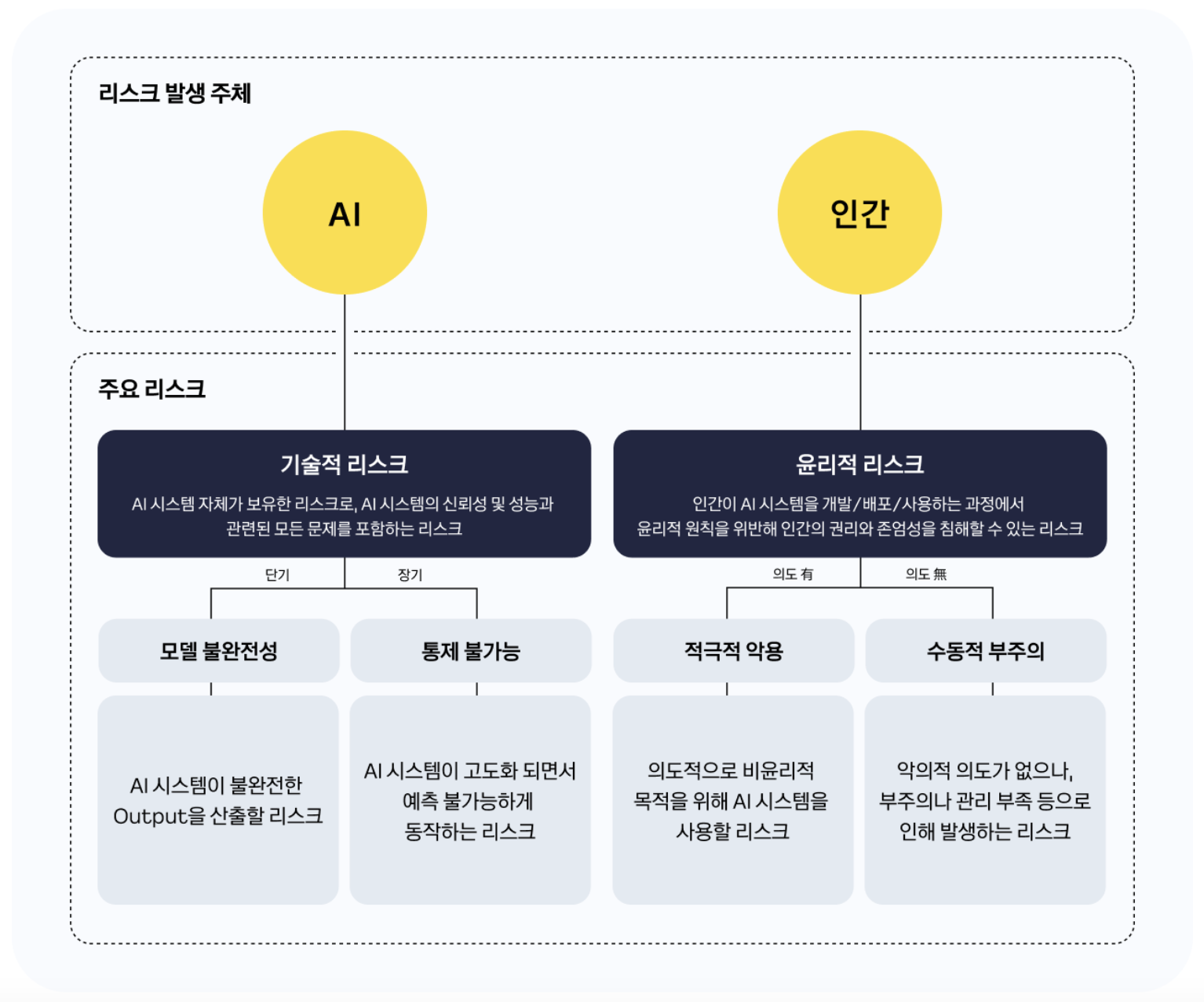

This is the process for managing potential risks in AI systems. It consists of three steps: risk identification, assessment, and response. Risks are divided into technical risks and ethical risks, depending on whether the entity that generated them is an AI or a human. Defining the characteristics of each risk and assessing it according to those characteristics makes it possible to set clear risk priorities and to prepare response strategies quickly and efficiently.
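The three steps of the cycle can be sketched in a few lines of code. This is an illustrative sketch only, not Kakao's actual assessment method. It assumes that AI-originated risks are the technical ones and human-originated risks the ethical ones, following the order in which the text lists them. The 1 to 5 scales, the likelihood-times-severity score, and the example risks are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Origin(Enum):
    AI = "ai"
    HUMAN = "human"


class RiskType(Enum):
    TECHNICAL = "technical"
    ETHICAL = "ethical"


@dataclass
class Risk:
    name: str
    origin: Origin
    likelihood: int  # hypothetical 1-5 scale, not from the source
    severity: int    # hypothetical 1-5 scale, not from the source


def classify(risk):
    """Assumed mapping: AI-originated -> technical, human-originated -> ethical."""
    return RiskType.TECHNICAL if risk.origin is Origin.AI else RiskType.ETHICAL


def assess(risk):
    """Hypothetical score: likelihood times severity (an assumption of this sketch)."""
    return risk.likelihood * risk.severity


def prioritize(risks):
    """Order risks so that the highest-scoring ones are handled first."""
    return sorted(risks, key=assess, reverse=True)


# Step 1, identification: made-up example risks.
identified = [
    Risk("hypothetical misuse by an operator", Origin.HUMAN, likelihood=2, severity=5),
    Risk("hypothetical model output error", Origin.AI, likelihood=3, severity=4),
]

# Steps 2 and 3, assessment and response: score each risk, then work through
# the list in priority order.
for risk in prioritize(identified):
    print(classify(risk).value, assess(risk), risk.name)
```

Separating classification from scoring mirrors the text's point that each type of risk is assessed according to its own characteristics; a fuller version would use a different `assess` function per risk type.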

AI risk governance

Kakao ASI governance is a decision-making system that ensures the safe and responsible development and use of AI systems. It is organized as a three-level management structure that includes AI Safety, an organization dedicated to AI safety, and an organization dedicated to company-wide risk management. This structure reviews AI risks from multiple angles and verifies the appropriateness of evaluation results and countermeasures.